I’ve been working on Picture Superiority Effect some more. Actually, I got derailed by discovered Perceptual Learning, so technically, I’m doing that now. The two feel very similar, but I’m not sure yet whether they are.

Perceptual learning applications typically function by repeatedly presenting the learner with a stimulus, prompting rapid categorization or interpretation (“which plane is this?”, “which emergency does this UI state indicate?”). Through numerous repetitions, the learner becomes proficient at this task, even if they cannot explicitly articulate what they have learned.

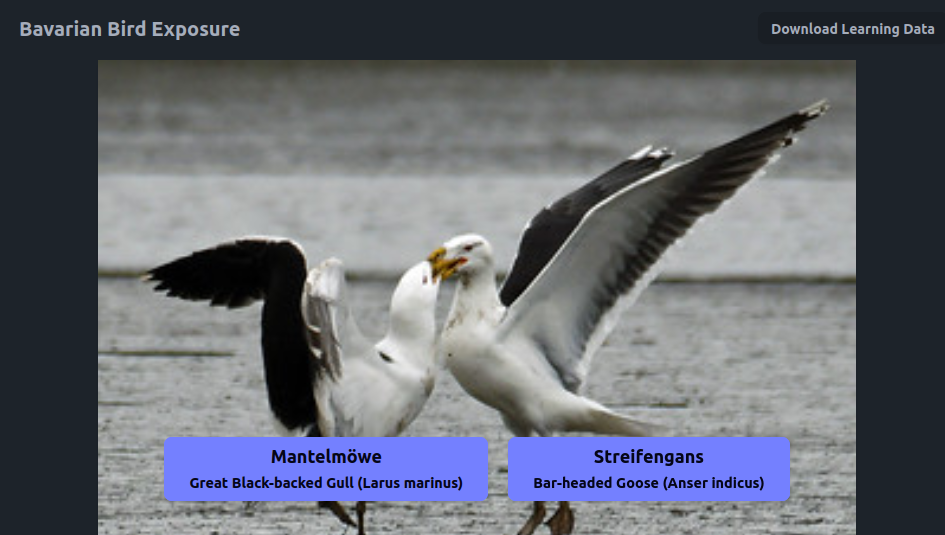

I’m currently playing around with a tool utilizing this approach to teach a classification task. Birds. Specifically, I have chosen to teach myself to identify birds in my area, leveraging freely available image (and bird) data from Avibase and Flickr.

Results are not in yet, not even preliminary ones. Instead, I want to share some findings discovered while building and self-testing the tool.

If you give the learner a binary choice, you are doing spaced repetition with two items at once

For my application, I opted to present an image of a bird along with two buttons; one with the name of the correct bird, and one with an incorrect one’s name (for example: an image of a crow and two buttons Buzzard and Crow).

Now, this may well be obvious, but it took me a test run to notice that any answer given in such a context is relevant data for the learner’s knowledge about both identifying buzzards and crows.

After all, if the learner correctly selects Crow in this scenario, they may have basing this decision on being very sure that it’s not a buzzard. In turn, a wrong choice implies a weak mental model for the looks of both animals.

That’s a neat time-saver in regards to items per time, but also introduces complications. I can never be sure whether the learner used their knowledge about crows, buzzards, both, or neither (guessing still yields 50% correct answers on average). The Ebisu algorithm’s noisy binary is interesting tooling for this, but I haven’t comprehensively solved this one.

In a binary choice situation, the frequency of an answer may be used as a heuristic

Similar to my map game, I assumed very low initial intervals in the range of seconds for new learning items (as in, a bird seen for the first time). Therefore, bird species guessed wrong often will come a lot, often almost back to back. Now, that’s all fine and dandy, but creates a problem when combined with a binary choice setup: At some point, I found myself just assuming that the more familiar answer would be the correct one, without consulting the picture much.

Something to keep in mind with this setup, although we are going to attack it in the next section.

Hard learning items can be used as the wrong answer in a binary choice setup

As promised, I found an angle to attack the above problem: I changed my code so that birds that were previously identified wrong often would be used as the wrong answer option in about 60% of trials.

Now, an average trial would have a “hard bird” as the correct answer, and another “hard bird” as the wrong answer. Thus “I have seen this name a lot” can no longer be used as a tool to guess which answer is likely correct.

Additionally, drawing on the first section, this has the advantage of strengthening conceptual borders for learning items that the learner found hard instead of just “wasting” the wrong answer with just any random item.

Presenting hard binary-choice exercises is not easy

My knowledge of birds is not particularly impressive. But it’s good enough to distinguish a duck from a hawk. Now, true expertise would of course include being able to tell which species of duck is presented — but that is not trained if I just have to pick between some duck and some hawk. Which is exactly the kind of choice that is often presented when the wrong answer is entirely randomly picked from the whole pool of birds.

This problem is very much dependent on the problem space. For example, in chicken sexing, the eternal example of everything ever written on expertise, it does just not occur. There are only two categories (male and female sex), which precludes any choice of options to present anyway, and the correct categorization is hard, which is what the whole thing is a about.

However, with bird-watch training, the problem is there. How do you pick two birds that are genuinely hard to tell apart?

One option is of course to extract these relationships of confusable birds from collected learning data, which I don’t have yet. This is essentially the cold start problem.

Another option, specific to this problem space, could be string distance — similar-looking birds may be more or less similarly named. I’m not sure about this, but I will explore it.

Conclusion

That’s all for now. I haven’t seen a real bird since starting this, but in the off-chance that you’re building something similar, I hope you took some value out of these early thoughts.

See you next time.